Shingling of Documents

Contents

Shingling of Documents#

In natural language processing, shingling is a set of unique shingles or n-grams composing of contiguous subsequences of tokens within a document, which can be used to determine the similarity between documents [SchutzeMR08]. In this case, the documents that shares pieces of short sentences or phrases will contain many common elements in their representation if the sentences appear in different order in the two documents.

\(k\)-shingles#

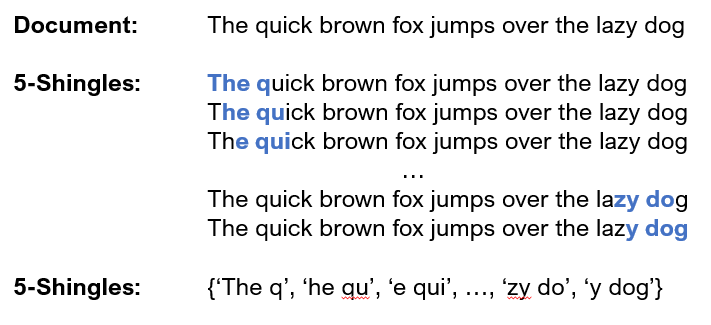

The simplest way to do shingling is by enumerating all the \(k\)-shingles of a particular document. This refers to any substring with length \(k\) found in the document (See Fig. 3).

Fig. 3 5-shingles found from the sentence “A quick brown fox jumps over the lazy dog”.#

Here, notice that the shingles found include the empty space represented as a single character. We have the freedom to perform any preprocessing that we deem important prior to extraction of the shingles. Commonly, we may compress newline characters or tabs as a single space for simplicity.

Example 1#

Suppose that in a document \(D\) contains a substring ilovedove. Pick the \(2\)-shingles of \(D\) by performing the \(k\)-shingling extraction algorithm.

Solution#

Picking the \(2\)-shingles of \(D\), we will get:

{il, lo, ov, ve, ed, do}

Notice that although substrings ov and ve appears twice, it only appears once as a shingle. Another variant of doing shingling is to construct a bag of shingles where each shingle would appear in the result as many times as it appears in the document.

Choosing the Shingle Size#

Choosing the \(k\) depends on the typical length of documents and how large the set of typical character is. Usually for corpus of documents containing emails, \(k = 5\) is a good choice. For larger documents, e.g. research articles, the choice of \(k = 9\) is workable.

To imagine the effect of the chosen \(k\) in shignling is to imagine how many possibilities the choice \(k\) creates. For example, in the English alphabet, there are only 26 \(\sim\) 20 characters, thus for a given \(k\)-shingles, there would be an estimate number of \(20^k\) shingles. For our email example, this would correspond to \(20^5 = 3,200,000\) possible shingles. Most emails are typically shorter than 3.2 million characters, as such, \(k = 5\) is a workable value for such assplications.

Extracting the \(k\)-shingles#

We can use the function k_shingles() from the alis.feature_extraction library to extract k-shingles from any given text.

from alis.feature_extraction import k_shingles

input_text = 'The quick brown fox jumps over the lazy dog'

shingles = k_shingles(input_text, 5) # Extract 5-shingles of the input text

print(sorted(shingles))

[' brow', ' fox ', ' jump', ' lazy', ' over', ' quic', ' the ', 'The q', 'azy d', 'brown', 'ck br', 'e laz', 'e qui', 'er th', 'fox j', 'he la', 'he qu', 'ick b', 'jumps', 'k bro', 'lazy ', 'mps o', 'n fox', 'over ', 'own f', 'ox ju', 'ps ov', 'quick', 'r the', 'rown ', 's ove', 'the l', 'uick ', 'umps ', 'ver t', 'wn fo', 'x jum', 'y dog', 'zy do']

Notice that from the above example and the exercise that we discussed a while ago, the number of shingles would be insurmountable even for a moderate size of collection of documents. As such, instead of using substrings directly as shingles, we may pick a hash function that maps the shingles to some number of buckets that treat the resulting bucket number as a shingle.

We can do this via the hashed_shingles() feature extractor from the alis.feature_extraction library.

from alis.feature_extraction import hashed_shingles

print(hashed_shingles(input_text, k=5, n=10))

{1, 643, 259, 262, 8, 651, 780, 531, 659, 19, 147, 158, 802, 932, 808, 427, 817, 51, 565, 311, 317, 450, 708, 838, 588, 849, 466, 83, 86, 857, 350, 226, 483, 360, 1002, 1004, 497, 245, 254}

Here, k denotes the \(k\)-shingle, and n represents the resulting bucket size \(2^n - 1\). The larger our choice for \(n\), the larger the storage requirement, but lesser collisions will be experienced.

Shingles Build from Words#

Another way to define shingles is to exploit some property in the document that allows us to focus an interesting property of the document. For instance, we can define a shingle to be a stop word followed by the next two words, regardless of whether they were stop words or not. This not only reduces the storage space required for us to store a given particular document, but allows us to focus on the important parts of the document.

This allows us for example to distinguish important parts of news articles as compared to let’s say those that appear as an advertisement in a web page. This biases the set of shingles extracted in favor of the article and differentiates it with the surrounding material, which make our Jaccard similarity operation perform better and focus on the article itself rather than some advertisement or surrounding material.

We can extract the word shingles from a given string of text using the word_shingles() and hashed_word_shingles() from the alis.feature_extraction library. Here, we may optionally input a collection of stop_words. We then define a shingle to be a stop word followed by the next k-1 words regardless of whether the next words were stop words or not.

from alis.feature_extraction import word_shingles, hashed_word_shingles

word_shingles extracts the next k-1 words given that the first word encountered is a stop word.

print(word_shingles(input_text.lower(), 3, stop_words=None))

{'over the lazy', 'the lazy dog', 'the quick brown'}

We may use hashed_word_shingles to extract the shingles in hashed representation form.

print(hashed_word_shingles(input_text.lower(), 3, 32, stop_words=None))

{1785721545, 2800494314, 331608684}

Exercises#

For the exercises, let’s consider an excerpt from Pablo Neruda’s poem I Do Not Love You Except Because I Love You

I do not love you except because I love you;

I go from loving to not loving you,

From waiting to not waiting for you

My heart moves from cold to fire.

Exercise 1#

Treat each newline character as a single space, then extract the \(5\)-shingle of this excerpt. Compute for both the non-hashed and hashed shingle representation of the excerpt.

Exercise 2#

Perform a preprocessing step of case normalization and removal of special characters then scan each line in the poem separately. Extract the \(3\)-word shingle of this excerpt. Solve for both the non-hashed and hashed word shingle representation of the excerpt.